OpenAI Simplifies Voice Assistant Development: 2024 Event Highlights

Streamlined API Access for Voice Recognition and Natural Language Processing

OpenAI's updates target the two core components of voice assistant development: speech-to-text conversion and natural language understanding (NLU). Exposing both through a streamlined API shortens development time and improves the overall user experience.

Enhanced Speech-to-Text Capabilities

The accuracy and capabilities of OpenAI's speech-to-text technology have improved markedly, which is crucial for building reliable and responsive voice assistants.

- Improved accuracy, especially in noisy environments: OpenAI's latest models demonstrate significantly improved performance in challenging acoustic conditions, making voice assistants more practical in real-world scenarios. This includes better handling of background noise, overlapping speech, and varying accents.

- Support for multiple languages and dialects: Developers can now build voice assistants capable of understanding and responding to users speaking in a wide range of languages and dialects, expanding the global reach of their applications. This multilingual support is a key differentiator in the competitive voice assistant market.

- Real-time transcription capabilities: The low latency of OpenAI's speech-to-text API allows for real-time transcription, essential for interactive voice applications where immediate responses are required. This feature is critical for applications like live captioning and real-time translation.

- Example: OpenAI's Whisper API provides fast, accurate transcription, a crucial component for any voice assistant. Relying on it reduces development time and enhances the overall user experience.
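
As a rough illustration of the transcription step described above, the sketch below wraps a speech-to-text call in a small helper. It assumes the official `openai` Python package (version 1.x) and its `audio.transcriptions.create` method with the `whisper-1` model; the helper name `transcribe_file` and the file name in the usage note are hypothetical. The client is passed in rather than created inside the function, so the helper can be exercised without network access.

```python
from typing import Any, Optional


def transcribe_file(client: Any, path: str, model: str = "whisper-1",
                    language: Optional[str] = None) -> str:
    """Send one audio file to a speech-to-text endpoint and return the text.

    `client` is assumed to expose `audio.transcriptions.create(...)`, as the
    openai 1.x Python client does. `language` is an optional ISO-639-1 hint
    such as "en" or "de"; it is only sent when provided.
    """
    kwargs = {"model": model}
    if language is not None:
        kwargs["language"] = language
    with open(path, "rb") as audio:
        result = client.audio.transcriptions.create(file=audio, **kwargs)
    # The default JSON response format exposes the transcript as `.text`.
    return result.text.strip()
```

With the real SDK this might be called as `transcribe_file(OpenAI(), "question.wav", language="en")`, where `OpenAI` is imported from the `openai` package and reads the API key from the `OPENAI_API_KEY` environment variable.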

Advanced Natural Language Understanding (NLU)

Beyond simply transcribing speech, understanding the intent and context behind the words is paramount. OpenAI has made major strides in this area.

- Contextual awareness for more natural conversations: OpenAI's NLU models now exhibit a much higher degree of contextual awareness, leading to more natural and engaging conversations. The voice assistant can remember previous interactions and tailor its responses accordingly.

- Improved intent recognition for accurate action execution: The improved accuracy in intent recognition ensures that the voice assistant correctly interprets user requests and executes the appropriate actions. This reduces errors and improves user satisfaction.

- Enhanced entity extraction for better data processing: OpenAI's NLU models excel at extracting key information (entities) from user utterances, such as dates, times, locations, and names. This allows for more precise data processing and more accurate responses.

- Example: The new NLU models better understand nuances in language, allowing for more sophisticated responses and personalized interactions within voice assistants. This includes understanding sarcasm, humor, and implied meanings.
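
One common way to make intent recognition and entity extraction usable in code is to ask the language model to answer in JSON and then validate that answer before acting on it. The sketch below shows only the validation side; the JSON shape (`intent`, `entities`), the intent names, and the `parse_nlu_reply` helper are assumptions chosen for illustration, not an OpenAI-defined schema.

```python
import json
from dataclasses import dataclass, field
from typing import Dict

# Hypothetical set of intents this assistant knows how to handle.
KNOWN_INTENTS = {"set_reminder", "play_music", "get_weather"}


@dataclass
class NluResult:
    intent: str
    entities: Dict[str, str] = field(default_factory=dict)


def parse_nlu_reply(raw: str) -> NluResult:
    """Turn a model's JSON reply into a validated NluResult.

    Anything malformed or unrecognised maps to the "unknown" intent, so the
    assistant can ask the user to rephrase instead of running a wrong action.
    """
    try:
        data = json.loads(raw)
    except json.JSONDecodeError:
        return NluResult("unknown")
    intent = data.get("intent") if isinstance(data, dict) else None
    if not isinstance(intent, str) or intent not in KNOWN_INTENTS:
        return NluResult("unknown")
    entities = data.get("entities")
    if not isinstance(entities, dict):
        entities = {}
    # Keep entity values as strings; later code decides how to parse them.
    return NluResult(intent, {str(k): str(v) for k, v in entities.items()})
```

Falling back to an explicit "unknown" intent is a deliberate design choice here: it keeps a misread request from triggering the wrong action.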

Pre-trained Models and Customizable Templates for Rapid Prototyping

OpenAI is accelerating voice assistant development by providing pre-built models and customizable templates, significantly reducing the time and effort required to build functional voice assistants.

Pre-built Voice Assistant Models

Developers can leverage pre-trained models to quickly build the foundation of their voice assistant.

- Ready-to-use models for common voice assistant functions (e.g., setting reminders, playing music): These pre-built models provide a head start, allowing developers to focus on unique features and differentiation rather than starting from scratch.

- Reduced development time and resources: Using pre-trained models dramatically reduces development time and resource costs, making voice assistant development accessible to a wider range of developers.

- Easy integration with existing platforms: OpenAI's models are designed for seamless integration with popular platforms and frameworks, simplifying the deployment process.

- Example: Developers can now quickly deploy a basic voice assistant using a pre-trained model and then customize it to meet specific requirements. This allows for rapid iteration and experimentation.
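
The idea of starting from a generic base and then customizing it can be sketched as a small handler registry: common functions are registered once, and project-specific ones are added alongside them. Everything here (`Assistant`, `on`, the intent names, the reply strings) is a hypothetical illustration rather than part of any OpenAI product.

```python
from typing import Callable, Dict

Handler = Callable[[Dict[str, str]], str]


class Assistant:
    """Routes a recognised intent to the function that handles it."""

    def __init__(self) -> None:
        self._handlers: Dict[str, Handler] = {}

    def on(self, intent: str) -> Callable[[Handler], Handler]:
        """Decorator that registers `func` as the handler for `intent`."""
        def register(func: Handler) -> Handler:
            self._handlers[intent] = func
            return func
        return register

    def handle(self, intent: str, entities: Dict[str, str]) -> str:
        handler = self._handlers.get(intent)
        if handler is None:
            return "Sorry, I can't help with that yet."
        return handler(entities)


assistant = Assistant()


@assistant.on("set_reminder")
def set_reminder(entities: Dict[str, str]) -> str:
    return f"Reminder set for {entities.get('time', 'later')}."


@assistant.on("play_music")
def play_music(entities: Dict[str, str]) -> str:
    return f"Playing {entities.get('artist', 'your mix')}."
```

Adding a new capability then means registering one more handler, which is what makes quick iteration on top of a shared base practical.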

Customizable Templates for Faster Development

OpenAI also offers customizable templates to further streamline the development process.

- Templates for common voice assistant interactions: These templates provide a structured framework for common conversational flows, saving developers significant time and effort.

- Accelerated development cycles: By using templates, developers can iterate faster and bring their voice assistants to market more quickly.

- Focus on unique features and differentiation: Templates handle the core functionality, allowing developers to concentrate on what makes their voice assistant unique.

- Example: Developers can use pre-built templates for conversational flows, saving time on designing dialogue trees and reducing development overhead.
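
A conversational-flow template can be as simple as a declarative description of which pieces of information (slots) a task needs and what to ask when one is missing. The following is a minimal sketch of that idea; the template format, the `REMINDER_FLOW` example, and the `next_prompt` and `respond` helpers are assumptions for illustration, not a format published by OpenAI.

```python
from typing import Dict, Optional

# Hypothetical template: required slots in the order they should be asked,
# plus a confirmation message filled in once every slot is known.
REMINDER_FLOW = {
    "slots": [
        {"name": "task", "prompt": "What should I remind you about?"},
        {"name": "time", "prompt": "When should I remind you?"},
    ],
    "confirm": "OK, I'll remind you to {task} at {time}.",
}


def next_prompt(flow: dict, filled: Dict[str, str]) -> Optional[str]:
    """Return the question for the first missing slot, or None if complete."""
    for slot in flow["slots"]:
        if not filled.get(slot["name"]):
            return slot["prompt"]
    return None


def respond(flow: dict, filled: Dict[str, str]) -> str:
    """Ask for the next missing slot, or confirm once all slots are filled."""
    prompt = next_prompt(flow, filled)
    if prompt is not None:
        return prompt
    return flow["confirm"].format(**filled)
```

Because the flow is plain data, a new interaction can be described by writing another dictionary instead of hand-coding a dialogue tree.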

Improved Tools and Resources for Easier Integration and Deployment

OpenAI has significantly improved the tools and resources available to developers, making integration and deployment simpler than ever.

Comprehensive Documentation and Tutorials

OpenAI provides extensive documentation and tutorials to support developers at every stage of the process.

- Detailed guides for integrating OpenAI's voice technology: These guides provide step-by-step instructions and code examples for integrating OpenAI's APIs and models into various applications.

- Step-by-step tutorials for common use cases: OpenAI offers tutorials covering various use cases, making it easier for developers to understand how to apply the technology to their specific needs.

- Active community support for troubleshooting: A vibrant community provides support and assistance, allowing developers to quickly resolve any issues they encounter.

- Example: OpenAI provides extensive documentation with code examples to ease the integration process for developers of all skill levels.

Simplified Deployment Options

OpenAI offers flexible deployment options to suit various needs and preferences.

- Cloud-based deployment for easy scalability: Developers can easily deploy their voice assistants to the cloud, enabling scalability and accessibility.

- On-device deployment for offline functionality: For applications requiring offline functionality, on-device deployment is an option; OpenAI's open-source Whisper model, for instance, can run locally for speech recognition.

- Support for various platforms and devices: OpenAI's technology supports a wide range of platforms and devices, ensuring broad compatibility.

- Example: Developers can easily deploy their voice assistants on popular cloud platforms like AWS, Azure, and GCP.
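
Cloud and on-device deployment can also be combined. The sketch below, written under stated assumptions, tries a list of transcription backends in order and falls back to the next one when a connection problem occurs. The `Transcriber` type and `transcribe_with_fallback` helper are hypothetical, and real client libraries usually raise their own exception types, which would need to be mapped to the built-in `ConnectionError` and `TimeoutError` caught here.

```python
from typing import Callable, Sequence

Transcriber = Callable[[bytes], str]


def transcribe_with_fallback(audio: bytes, backends: Sequence[Transcriber]) -> str:
    """Try each backend in order and return the first successful transcript.

    A typical ordering is cloud first (more capable) and on-device second
    (works offline). Only connection-type errors trigger the fallback.
    """
    last_error: Exception = RuntimeError("no backends configured")
    for backend in backends:
        try:
            return backend(audio)
        except (ConnectionError, TimeoutError) as exc:
            last_error = exc
    # Every backend failed (or none was given): surface the last error.
    raise last_error
```

Other errors, such as a malformed audio file, are deliberately not caught, since retrying them on a different backend would only hide the underlying problem.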

Conclusion

OpenAI's 2024 event advanced the field of voice assistant development. By providing streamlined API access, pre-trained models, and improved tools, OpenAI has lowered the barrier to entry for developers, enabling a broader range of applications. The advancements in speech-to-text, natural language understanding, and deployment options make building sophisticated voice assistants easier and faster. Explore OpenAI's resources and start building your own voice assistant today.

Featured Posts

-

Chat Gpt And Open Ai The Ftc Investigation And Its Impact On The Future Of Ai

Apr 22, 2025

Chat Gpt And Open Ai The Ftc Investigation And Its Impact On The Future Of Ai

Apr 22, 2025 -

Will Trumps Obamacare Supreme Court Argument Help Rfk Jr

Apr 22, 2025

Will Trumps Obamacare Supreme Court Argument Help Rfk Jr

Apr 22, 2025 -

Investigation Into Persistent Toxic Chemicals From Ohio Train Derailment

Apr 22, 2025

Investigation Into Persistent Toxic Chemicals From Ohio Train Derailment

Apr 22, 2025 -

La Fire Victims Face Exploitative Rental Prices A Selling Sunset Stars Accusation

Apr 22, 2025

La Fire Victims Face Exploitative Rental Prices A Selling Sunset Stars Accusation

Apr 22, 2025 -

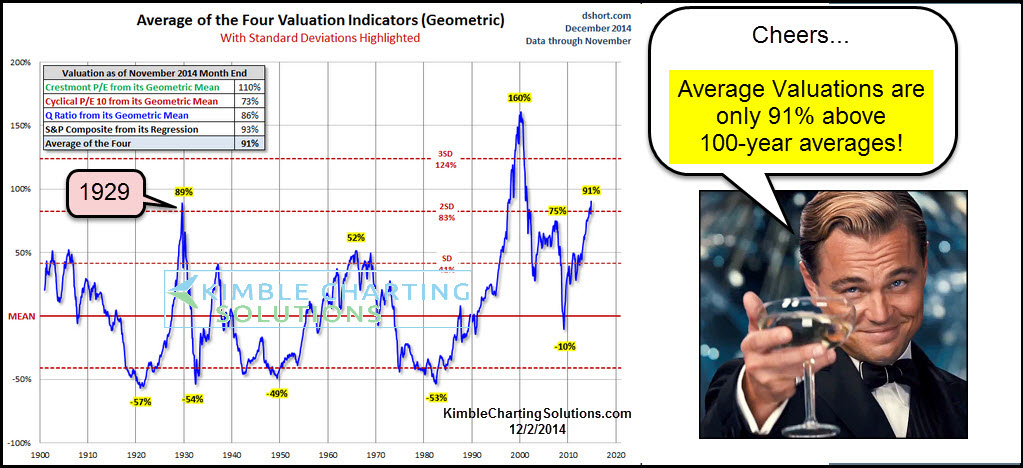

Investor Concerns About High Stock Valuations Bof As Response

Apr 22, 2025

Investor Concerns About High Stock Valuations Bof As Response

Apr 22, 2025